Harvard UP, 2016, 300 pages

Do Not Name Your Well After a Cursed Town Destroyed by Capitalism

When you’re planning to drill 5.5 kilometres down from sea level into the payzone where explosive, scalding gases charged at 14,000 psi are waiting to blow up your rig, you would think you’d name the well something auspicious. You know, something like “Lucky 7.” But no, BP decided to name their well “Macondo.” Macondo, the fictional town in Gabriel García Márquez’s One Hundred Years of Solitude, is a cursed town which was destroyed by capitalism. With a name like that, you’re just asking to be struck down.

Do Not Celebrate Mission Accomplished Too Soon

On the day of the blowout, BP and Transocean VIPs came aboard the rig to celebrate the Deepwater Horizon’s stellar safety record: zero lost time in seven years. Less than an hour before the blowout, they were finishing up a meeting. The last topic was a question tabled by the BP vice-president: “Why do you think this rig performs as well as it does?”

The scene from Deepwater Horizon’s last day reminds me of the plays the ancient Greeks wrote. When Agamemnon, the Greek king, comes home after winning the Trojan War, he feels confident enough to tread the purple as he alights from his victorious chariot. This act–not unlike the VP asking: “Why is this rig so great?”–seals Agamemnon’s doom. Have we not learned that we are in the most danger when we are the most confident?

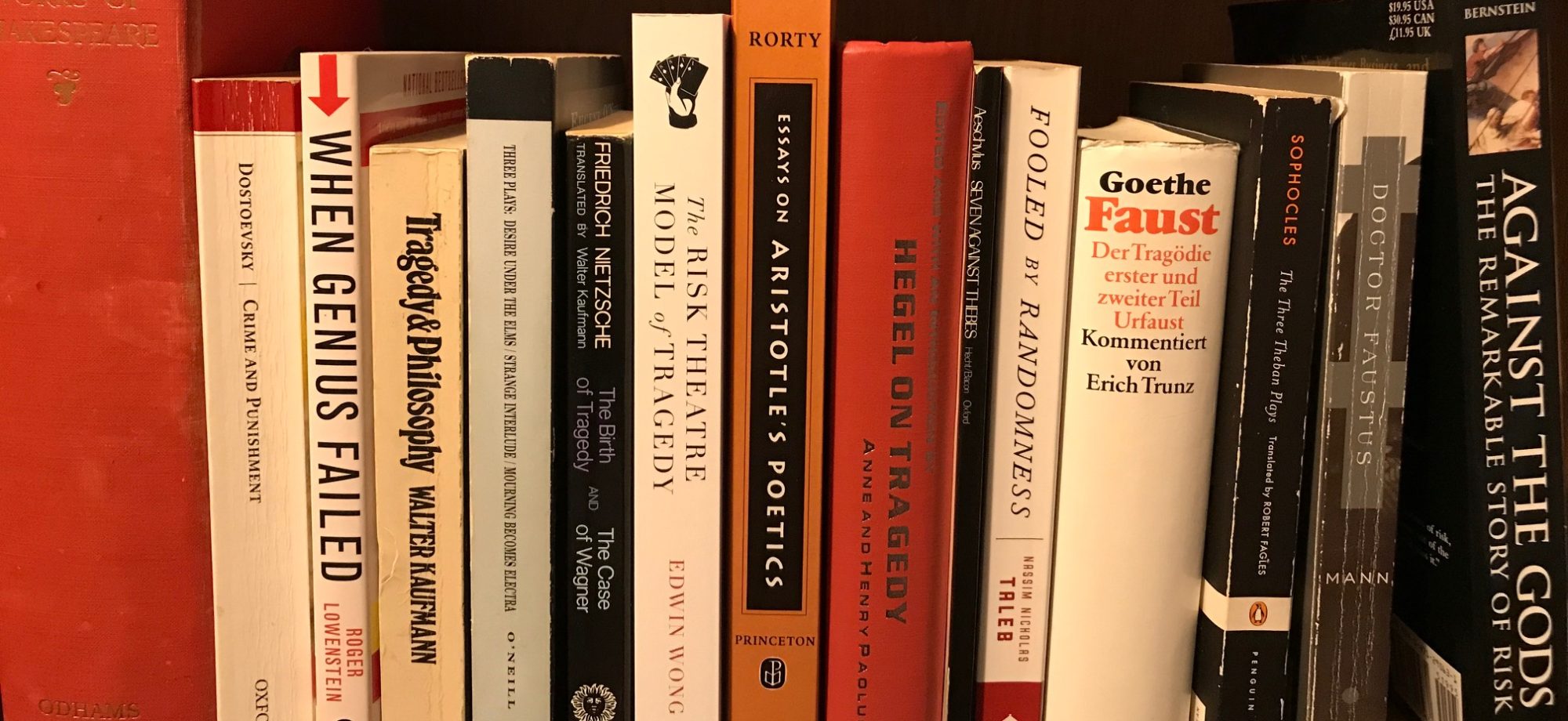

I think we all could benefit from going to the theatre once in a while. Aeschylus’ Oresteia or Shakespeare’s Macbeth, two plays where the hero is most confident before the fall, are recommended watching for MBA candidates, engineers, and systems analysts. These plays dramatize real-world risks that the equations and formulas don’t tell you: do not celebrate mission accomplished too soon. In fact, even after the mission is accomplished, keep your celebrations lean. Some of the things they don’t teach at business school they teach at a theatre near you.

The Edge

During drilling, a dangerous event known as a “kick” happens when pressurized hydrocarbons enter the well. Left unchecked, they shoot up the riser and blow out the rig. To isolate the hydrocarbons from the rig, drillers keep a column of mud between the rig and the hydrocarbons. The weight of the mud in the well, which can vary from 8.5 to 22 pounds per gallon, counteracts the pressurized hydrocarbons at the bottom of the well.

Mud used to be just that: mud. Today mud is a base fluid mixed with a heavy mineral such as barite. Too little mud, and the hydrocarbons can come up the riser. Too much mud, however, results in another dangerous situation called “lost returns.” The diameter of the well varies from three feet at the top down to just less than a foot at the bottom. The sides of the well are fragile. If the weight of the mud is too heavy, it breaks apart the sides of the well, and the mud is irrevocably lost beneath the sea bed. When the mud is lost beneath the sea bed, the hydrocarbons can enter the riser, blowing out the rig.
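For readers who like to see the arithmetic, here is a minimal sketch of the balancing act described above. It is not taken from Boebert and Blossom. It assumes the standard oilfield rule of thumb that hydrostatic pressure in psi is roughly 0.052 times the mud weight in pounds per gallon times the vertical depth in feet. The function names, the 18,000-foot depth, and the 15,000 psi fracture limit are hypothetical values chosen for illustration only; the 14,000 psi figure is the payzone pressure mentioned earlier in this review.

```python
# Rule-of-thumb conversion: psi per foot of depth per (pound per gallon) of mud.
PSI_PER_FT_PER_PPG = 0.052


def hydrostatic_psi(mud_ppg: float, depth_ft: float) -> float:
    """Pressure exerted at the bottom of a static column of mud."""
    return PSI_PER_FT_PER_PPG * mud_ppg * depth_ft


def well_state(mud_ppg: float, depth_ft: float,
               pore_psi: float, fracture_psi: float) -> str:
    """Say which side of the edge a given mud weight puts the well on.

    pore_psi     -- pressure of the hydrocarbons at the bottom of the well
    fracture_psi -- pressure at which the sides of the well break apart
                    (a hypothetical input; real limits vary with depth)
    """
    bottom_psi = hydrostatic_psi(mud_ppg, depth_ft)
    if bottom_psi < pore_psi:
        return "kick risk"          # too little mud: hydrocarbons can enter
    if bottom_psi > fracture_psi:
        return "lost returns risk"  # too much mud: the formation fractures
    return "inside the window"


if __name__ == "__main__":
    # Illustrative numbers only, not the actual Macondo well data.
    for ppg in (14.0, 15.5, 17.0):
        print(ppg, well_state(ppg, 18_000, 14_000, 15_000))
```

With these made-up limits, only the middle mud weight stays inside the window, which is the point: the safe range can be narrow, and both directions out of it are dangerous.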

Boebert and Blossom refer to the art of keeping a well safe as staying on the right side of “the edge.” When a well control situation such as a kick or lost returns happens, the risk of going over the edge rears its ugly head. They quote Hunter S. Thompson on what it means to go over the edge:

Hunter S. Thompson likened the transition of a situation or system into disaster to what can occur when a motorcyclist seeking high-speed thrill rides along a twisting, dangerous highway:

“The Edge … There is no honest way to explain it because the only people who really know where it is are the ones who have gone over.”

Complexity or n(n-1)/2

After a well is drilled, the rig caps the well. At a later date, another specialized rig comes to set up the well for production. To cap a well is a complex procedure with many moving parts. To illustrate how adding tasks to the procedure quickly increases the complexity, Boebert and Blossom cite a fascinating formula:

Planning for the abandonment of Macondo was extremely complex. The fundamental source of that complexity was a phenomenon well known to systems engineers: the number of potential pairwise interactions among a set of N elements grows as N times N-1, divided by 2. That means that if there are two elements in the set, there is one potential interaction; if there are five elements, there are ten possible interactions; ten elements, and there are forty-five; and so forth. If the interactions are more complex, such as when more than two things combine, the number is larger. Every potential interaction does not usually become an actual one, but adding elements to a set means that complexity grows much more rapidly than ordinary intuition would expect.
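The counts in the passage above are easy to check. The short sketch below is mine, not the authors’: it computes N times N-1, divided by 2, and confirms the result by listing every pair. The second function is an assumption about what “more than two things combine” could mean, namely counting every group of two or more elements; the book does not give a formula for that case.

```python
from itertools import combinations


def pairwise_interactions(n: int) -> int:
    """Number of potential pairwise interactions among n elements."""
    return n * (n - 1) // 2


def multiway_interactions(n: int) -> int:
    """Assumed reading of 'more than two things combine':
    every subset of size two or more, i.e. 2**n minus the
    n single elements and the one empty set."""
    return 2 ** n - n - 1


if __name__ == "__main__":
    for n in (2, 5, 10):
        # Brute-force check: enumerate every unordered pair.
        listed = len(list(combinations(range(n), 2)))
        assert listed == pairwise_interactions(n)
        print(n, pairwise_interactions(n), multiway_interactions(n))
    # pairwise counts printed: 1, 10, 45
```

Two, five, and ten elements give one, ten, and forty-five pairs, matching the passage. Under the assumed multiway reading, ten elements already allow over a thousand possible groupings.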

I find complexity fascinating because it leads to “emergent events.” Emergent events, write Boebert and Blossom, arise “from a combination of decisions, actions, and attributes of a system’s components, rather than from a single act.” Emergent events are part of a scholarly mindset which adopts a systems perspective of looking at events. Boebert and Blossom’s book adopts such a model, which is opposed to the judicial model of looking at the Macondo disaster. The judicial approach is favoured when trying to assign blame: the series of events leading to the disaster is likened to a row of dominoes which can be traced back to a blameworthy act.

Unlike Boebert and Blossom, I study literary theory, not engineering. But, like Boebert and Blossom, I find emergent events of the utmost interest. I’ve written a theory of drama called “risk theatre” that makes risk the pivot of the action. In drama, playwrights entertain theatregoers by dramatizing unexpected outcomes or unintended consequences. These unexpected outcomes can be the product of fate, the gods, or miscalculations on the part of the characters. But another way to draw out unexpectation from the story is to add complexity. That is to say, if there are two events in the play, there is one potential interaction; if there are five events, there are ten possible interactions; ten events, and there are forty-five. The trick for playwrights is this: how many events can you juggle and keep the narrative intact?

Luxuriant Retrospective Position

“Luxuriant retrospective position” is Boebert and Blossom’s term for “armchair quarterback.” They acknowledge that the project managers, drillers, and engineers were not operating from a luxuriant retrospective position. Many of them were doing the best that they could with an incomplete understanding. Often, when I read books breaking down disasters, the writers point fingers from their armchair perspective. This, to me, smacks of the same hubris they assign to their targets. It is like saying you would have a military historian rather than Napoleon fighting all your battles because the military historian can see things that Napoleon could not. It is quite decent of Boebert and Blossom to acknowledge how they are looking at things from hindsight.

I’m writing this in the midst of this coronavirus pandemic. A year down the road, the books will start hitting the shelves telling us what we did wrong, telling us how we could have saved lives, and telling us how, if we had looked at things rationally, we could have done so easily. Many people will read these books, and parrot them. Will you be one of these people? Who would you rather fight your wars, the general Napoleon, or the historian Edward Gibbon? Who would you rather manage your money, John Meriwether (architect of a doomed hedge fund) or journalist Roger Lowenstein (who wrote a book exposing the errors of the doomed hedge fund)? Would you rather have BP’s team run the oil rig, or Boebert and Blossom? If you said Gibbon, Lowenstein, and Boebert and Blossom, think again. Can those who understand backwards also act forwards? There is actually a story, a true story of how Napoleon appointed the mathematician Laplace to be the minister of the interior. Laplace, like Gibbon, Lowenstein, and Boebert and Blossom, would have taken a scientific approach to administration. Good, you say? No. Napoleon fired him for “carrying the spirit of infinitesimal into administration.” There is a tragedy in how those who understand backwards cannot act forwards.

Book Blurb

On April 20, 2010, the crew of the floating drill rig Deepwater Horizon lost control of the Macondo oil well forty miles offshore in the Gulf of Mexico. Escaping gas and oil ignited, destroying the rig, killing eleven crew members, and injuring dozens more. The emergency spiraled into the worst human-made economic and ecological disaster in Gulf Coast history.

Senior systems engineers Earl Boebert and James Blossom offer the most comprehensive account to date of BP’s Deepwater Horizon oil spill. Sifting through a mountain of evidence generated by the largest civil trial in U.S. history, the authors challenge the commonly accepted explanation that the crew, operating under pressure to cut costs, made mistakes that were compounded by the failure of a key safety device. This explanation arose from legal, political, and public relations maneuvering over the billions of dollars in damages that were ultimately paid to compensate individuals and local businesses and repair the environment. But as this book makes clear, the blowout emerged from corporate and engineering decisions which, while individually innocuous, combined to create the disaster.

Rather than focusing on blame, Boebert and Blossom use the complex interactions of technology, people, and procedures involved in the high-consequence enterprise of offshore drilling to illustrate a systems approach which contributes to a better understanding of how similar disasters emerge and how they can be prevented.

Author(s) Blurb

Earl Boebert is a retired Senior Scientist at the Sandia National Laboratories.

James M. Blossom gained his engineering experience at Los Alamos National Laboratory and the General Electric Corporation.

Until next time, I’m Edwin Wong, and I’m doing Melpomene’s work.